Sports Performance Analysis Using PoseNet: Enhancing Athletic Training Through AI and Computer Vision

Table of Contents

- Introduction

- Objectives

- 2.1 General Objective

- 2.2 Specific Objectives

- Technology Stack

- 3.1 Frontend Technologies

- 3.2 Backend Technologies

- 3.3 AI and Machine Learning Tools

- 3.4 Optional Integrations

- Features of the System

- 4.1 Real-Time Pose Detection

- 4.2 Joint Angle Calculation

- 4.3 Movement Classification

- 4.4 Performance Scoring and Feedback

- 4.5 Progress Tracking Dashboard

- 4.6 Session Recording and Replay

- 4.7 Multi-Athlete Monitoring

- 4.8 Injury Prevention Analysis

- 4.9 Reporting and Analytics Export

- 4.10 Integration with Wearable Devices (Optional)

- Summary and Conclusion

Introduction

In the modern era of sports science and athletic development, data-driven decision-making plays a crucial role in improving performance, reducing injuries, and maximizing training efficiency. Coaches and trainers are increasingly relying on technology to measure and analyze athletes’ biomechanics in real time. One of the most promising technologies for this purpose is Pose Estimation — the process of detecting human body joints and postures from video or image data.

One widely used pose estimation model is PoseNet, a deep learning model released by Google that detects key body joints such as shoulders, elbows, knees, and ankles from a standard webcam or smartphone camera. It is lightweight, works on edge devices, and can run directly in a browser using TensorFlow.js, which makes it a good fit for both professional and educational applications.

The proposed project, “Sports Performance Analysis Using PoseNet”, aims to create a system that automatically evaluates an athlete’s form and movement through computer vision. This system will identify body poses, analyze motion efficiency, detect inconsistencies, and provide feedback for performance improvement.

Through this project, students, coaches, and researchers can explore how AI and computer vision can be applied in sports analytics, biomechanics research, and physical education.

Objectives

The main goal of the project is to develop an AI-powered sports performance analysis tool that uses PoseNet to track and evaluate human movement in real time. The general and specific objectives are listed below.

2.1 General Objective

To design and implement a sports performance analysis system that utilizes PoseNet for motion tracking and performance evaluation.

2.2 Specific Objectives

- To detect and visualize human body keypoints (such as shoulders, elbows, hips, knees, and ankles) using the PoseNet model.

- To analyze athlete movements and posture alignment based on captured keypoints and angles between joints.

- To measure performance metrics, such as movement accuracy, reaction time, body symmetry, and range of motion.

- To generate visual feedback or reports that guide athletes in correcting form errors or improving efficiency.

- To develop a user-friendly dashboard for coaches or trainers to monitor athlete progress and compare performance data over time.

- To promote AI literacy in sports science, encouraging integration of data analytics and machine learning in physical education and research settings.

By achieving these objectives, this project serves as both a technological innovation and a learning platform for exploring AI applications in the field of sports.

Technology Stack

To build a robust and efficient system for sports performance analysis, the project will employ a combination of AI frameworks, web technologies, and visualization tools.

3.1 Frontend Technologies

- HTML5 / CSS3 / JavaScript (ES6): For creating the web interface that displays live video feed and analysis results.

- TensorFlow.js: The JavaScript version of TensorFlow that allows PoseNet to run directly in a browser without needing a server-side GPU.

- Chart.js / D3.js: For displaying performance graphs, angle comparisons, and progress analytics.

- Bootstrap 5: For responsive and modern UI design.

3.2 Backend Technologies

- Node.js with Express.js: To handle API requests, manage sessions, and process uploaded data.

- Python (Flask/FastAPI): For advanced analytics, motion classification, and data processing tasks.

- OpenCV: For additional image preprocessing and video manipulation (frame extraction, motion smoothing).

- MongoDB / PostgreSQL: Database system for storing athlete profiles, performance scores, and session history.

3.3 AI and Machine Learning Tools

- PoseNet Model (TensorFlow.js in the browser; TensorFlow Lite or a community port when used from Python): For pose detection and keypoint extraction.

- NumPy / Pandas: For data analysis and performance metric computation.

- Matplotlib / Seaborn: For visual analytics and report generation.

- Scikit-learn / TensorFlow: For possible expansion into motion classification or injury prediction models.

3.4 Optional Integrations

- Cloud Storage (Firebase / AWS S3): For saving video recordings or user sessions.

- WebRTC: For real-time camera streaming and multi-user (coach–athlete) sessions.

- Power BI / Looker Studio (formerly Google Data Studio): For data visualization and analytics dashboards.

This stack keeps the system browser-accessible and cross-platform, so it can run even on basic laptops or tablets, while the separate backend and database layers leave room to scale as more athletes and sessions are added.

Features of the System

The Sports Performance Analysis System using PoseNet offers a range of intelligent and interactive features to analyze motion and support athletic improvement. Below are the major system features and their corresponding functions.

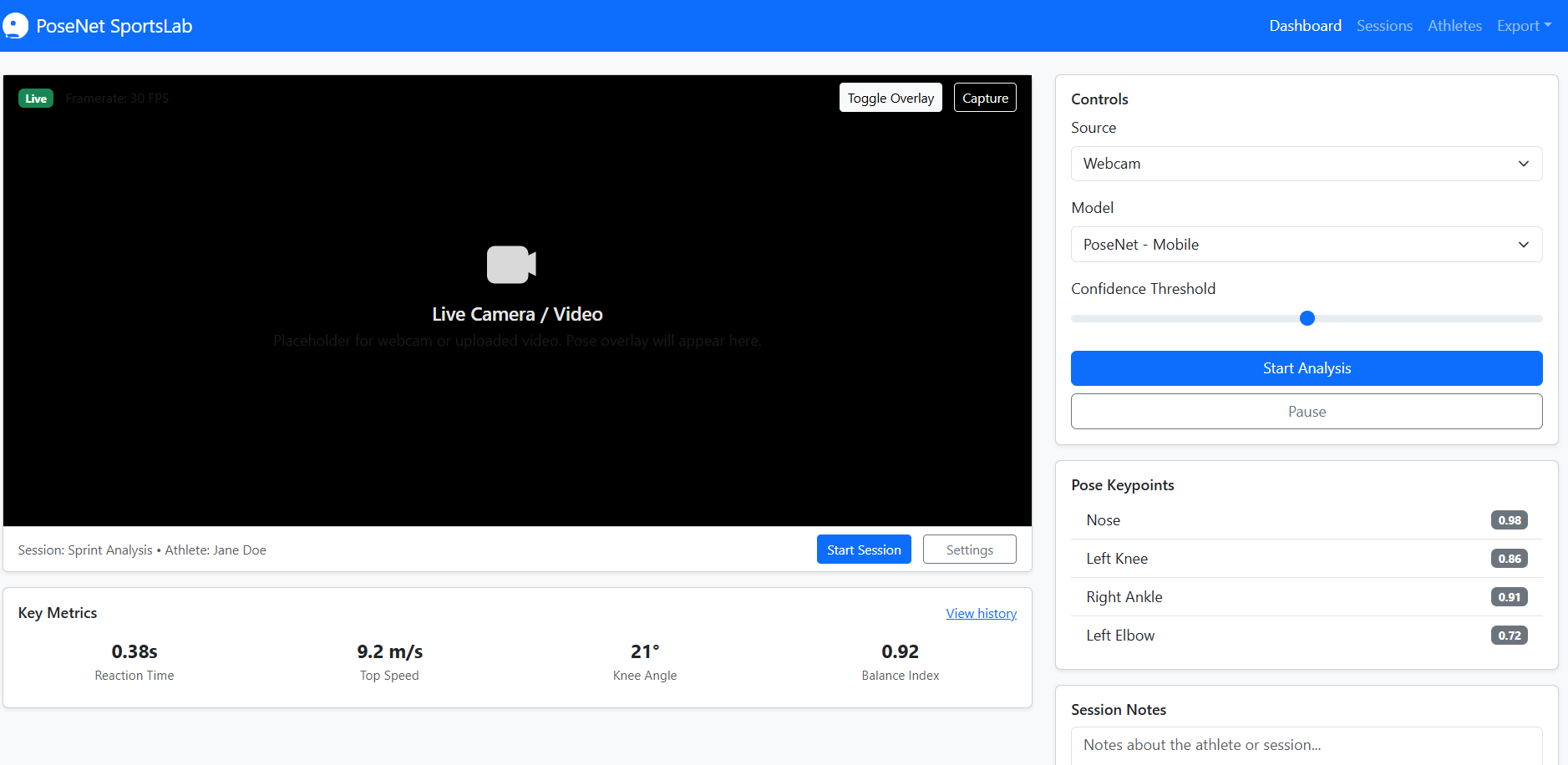

4.1 Real-Time Pose Detection

The system captures video input from a webcam or smartphone and uses PoseNet to detect 17 key body landmarks: the nose, eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles. These keypoints are mapped on-screen using lines and circles, forming a skeleton overlay on the athlete’s body.

This feature allows both athlete and coach to instantly visualize posture and motion flow during training sessions.
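The overlay step can be sketched in Python as follows. This is a minimal illustration, not the project's actual implementation: it assumes each detected pose has already been converted to a dictionary of `(x, y, score)` tuples, and the names `SKELETON_EDGES`, `skeleton_segments`, and the `min_score` cutoff of 0.5 are hypothetical choices made for this example. The keypoint names follow the order used by the TensorFlow.js PoseNet model.

```python
from typing import Dict, List, Tuple

# The 17 keypoint names, in the order used by the TensorFlow.js PoseNet model.
KEYPOINT_NAMES = [
    "nose", "leftEye", "rightEye", "leftEar", "rightEar",
    "leftShoulder", "rightShoulder", "leftElbow", "rightElbow",
    "leftWrist", "rightWrist", "leftHip", "rightHip",
    "leftKnee", "rightKnee", "leftAnkle", "rightAnkle",
]

# Pairs of keypoints joined by a line in the overlay (illustrative choice).
SKELETON_EDGES = [
    ("leftShoulder", "rightShoulder"), ("leftHip", "rightHip"),
    ("leftShoulder", "leftElbow"), ("leftElbow", "leftWrist"),
    ("rightShoulder", "rightElbow"), ("rightElbow", "rightWrist"),
    ("leftShoulder", "leftHip"), ("rightShoulder", "rightHip"),
    ("leftHip", "leftKnee"), ("leftKnee", "leftAnkle"),
    ("rightHip", "rightKnee"), ("rightKnee", "rightAnkle"),
]

Keypoint = Tuple[float, float, float]  # (x, y, confidence score)
Segment = Tuple[Tuple[float, float], Tuple[float, float]]


def skeleton_segments(pose: Dict[str, Keypoint],
                      min_score: float = 0.5) -> List[Segment]:
    """Return the line segments to draw, skipping low-confidence keypoints."""
    segments: List[Segment] = []
    for start, end in SKELETON_EDGES:
        if start not in pose or end not in pose:
            continue
        sx, sy, s_score = pose[start]
        ex, ey, e_score = pose[end]
        # Draw a limb only when both of its endpoints were detected reliably.
        if s_score >= min_score and e_score >= min_score:
            segments.append(((sx, sy), (ex, ey)))
    return segments
```

Filtering on the confidence score keeps the overlay from flickering toward occluded or off-screen joints; the cutoff value would need tuning against real footage.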

4.2 Joint Angle Calculation

The system computes the angles between body joints (e.g., knee flexion, elbow extension) to assess posture accuracy. For instance, a basketball player’s shooting form or a runner’s stride angle can be analyzed to determine optimal alignment.

This feature is essential for identifying inefficient movements that may lead to injuries or reduced performance.
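One common way to compute such an angle is to take the three keypoints around a joint (for a knee: hip, knee, ankle) and measure the angle between the two limb vectors that meet at the middle point. The sketch below assumes 2D image coordinates; the function name `joint_angle` is introduced here only for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at point b, between segments b->a and b->c (0 to 180)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0 or n2 == 0:
        raise ValueError("joint_angle needs three distinct points")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Example: hip directly above the knee, ankle directly in front of it.
hip, knee, ankle = (0.0, 1.0), (0.0, 0.0), (1.0, 0.0)
knee_angle = joint_angle(hip, knee, ankle)  # a right angle
```

Because the keypoints are 2D projections, the measured angle depends on camera placement; a side-on view is usually the most faithful for knee and elbow flexion.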

4.3 Movement Classification

Using motion sequences and body joint data, the system can classify different types of movements such as squats, jumps, stretches, or punches. This classification helps track specific training routines and evaluate consistency over repetitions.

Machine learning models can later be trained on collected datasets to recognize specific sports actions automatically (e.g., “tennis serve,” “basketball shot,” or “golf swing”).
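Before a trained classifier is available, repetitions of a known exercise can be tracked with a simple rule-based approach. The sketch below is a stand-in for the machine learning step, not a replacement for it: it counts squat repetitions from a sequence of per-frame knee angles, and the thresholds `down_below` and `up_above` are assumed values that would need calibration per athlete and camera angle.

```python
from typing import Iterable


def count_squat_reps(knee_angles: Iterable[float],
                     down_below: float = 100.0,
                     up_above: float = 160.0) -> int:
    """Count squats as a drop below `down_below` followed by a rise above `up_above`."""
    reps = 0
    is_down = False
    for angle in knee_angles:
        if not is_down and angle < down_below:
            is_down = True          # athlete reached the bottom position
        elif is_down and angle > up_above:
            is_down = False         # athlete stood back up: one full repetition
            reps += 1
    return reps
```

Using two separate thresholds means that small jitter around a single cutoff does not register as extra repetitions.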

4.4 Performance Scoring and Feedback

After analyzing a movement, the system generates a performance score based on accuracy, stability, and symmetry. Real-time feedback can be displayed on-screen, such as:

- “Keep your back straight.”

- “Increase your arm extension.”

- “Good form — maintain posture.”

This instant feedback loop allows for immediate correction, improving athlete learning outcomes.
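A scoring rule of this kind can be sketched as follows. The formula, the target angles, and the tolerances are all assumptions made for illustration; the source does not define how accuracy, stability, and symmetry are weighted. Here each joint is penalized in proportion to how far its measured angle is from a target, and a feedback cue is emitted when the error exceeds the tolerance.

```python
from typing import Dict, List, Tuple

# joint name -> (target angle in degrees, tolerance in degrees, feedback cue)
Targets = Dict[str, Tuple[float, float, str]]


def score_pose(measured: Dict[str, float],
               targets: Targets) -> Tuple[float, List[str]]:
    """Return a 0-100 score and the feedback cues for joints outside tolerance."""
    if not targets:
        raise ValueError("at least one target joint is required")
    penalties: List[float] = []
    feedback: List[str] = []
    for joint, (target, tolerance, cue) in targets.items():
        error = abs(measured[joint] - target)
        # Penalty grows linearly and saturates at twice the tolerance.
        penalties.append(min(error / (2.0 * tolerance), 1.0))
        if error > tolerance:
            feedback.append(cue)
    score = 100.0 * (1.0 - sum(penalties) / len(penalties))
    if not feedback:
        feedback.append("Good form, maintain posture.")
    return round(score, 1), feedback


# Hypothetical targets for an overhead press at full extension.
PRESS_TARGETS: Targets = {
    "back": (180.0, 10.0, "Keep your back straight."),
    "elbow": (170.0, 15.0, "Increase your arm extension."),
}
score, cues = score_pose({"back": 176.0, "elbow": 140.0}, PRESS_TARGETS)
```

In this example the back is within tolerance while the elbow is far from full extension, so only the arm-extension cue is returned.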

4.5 Progress Tracking Dashboard

A web dashboard will display each athlete’s recorded sessions, scores, and improvement charts over time. Coaches can view detailed metrics such as:

- Average joint angles

- Balance index

- Speed and reaction time

- Body symmetry ratio

This data-driven approach enables coaches to tailor training programs based on evidence rather than observation alone.
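Some of these dashboard metrics can be derived directly from the per-frame joint angles of a session. The sketch below is one possible definition, not a standard one: range of motion is taken as the difference between the largest and smallest angle, and the symmetry ratio is assumed to be the smaller range divided by the larger, so that 1.0 means both sides moved equally. The function name `summarize_joint` is hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_joint(left: Sequence[float],
                    right: Sequence[float]) -> Dict[str, float]:
    """Summarize one session's left/right angle series (degrees) for a joint."""
    left_rom = max(left) - min(left)     # range of motion, left side
    right_rom = max(right) - min(right)  # range of motion, right side
    larger = max(left_rom, right_rom)
    return {
        "mean_left": mean(left),
        "mean_right": mean(right),
        "rom_left": left_rom,
        "rom_right": right_rom,
        # 1.0 means perfectly symmetric; lower values mean one side moves less.
        "symmetry_ratio": 1.0 if larger == 0 else min(left_rom, right_rom) / larger,
    }


summary = summarize_joint(left=[170.0, 90.0, 170.0], right=[170.0, 110.0, 170.0])
```

Storing one such summary per session gives the dashboard a compact series to chart over time.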

4.6 Session Recording and Replay

Each training session can be recorded for playback. The recorded video is synchronized with PoseNet keypoints, allowing frame-by-frame analysis of motion.

Athletes can compare their form across sessions and visualize their biomechanical improvements over time.
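One way to keep the video and keypoints in step during replay is to store a timestamp with every pose frame and, for any playback position, look up the closest recorded frame. The sketch below assumes timestamps in seconds, sorted in ascending order; `frame_index_at` is a name introduced for this example.

```python
import bisect
from typing import Sequence


def frame_index_at(timestamps: Sequence[float], playback_time: float) -> int:
    """Index of the recorded pose frame closest in time to `playback_time`."""
    if not timestamps:
        raise ValueError("no recorded frames")
    i = bisect.bisect_left(timestamps, playback_time)
    if i == 0:
        return 0                        # before the first frame
    if i == len(timestamps):
        return len(timestamps) - 1      # after the last frame
    before, after = timestamps[i - 1], timestamps[i]
    # Pick whichever neighbouring frame is nearer to the requested time.
    return i if (after - playback_time) < (playback_time - before) else i - 1
```

A binary search keeps scrubbing responsive even for long sessions, since each lookup is logarithmic in the number of stored frames.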

4.7 Multi-Athlete Monitoring

The system can be extended to handle multiple subjects in the frame, allowing team-level monitoring (e.g., tracking several runners or basketball players simultaneously).

PoseNet includes a multi-person estimation mode, which makes it suitable for group training environments, although keypoint accuracy can drop when athletes overlap in the frame.

4.8 Injury Prevention Analysis

By tracking body symmetry and repetitive strain patterns, the system can help detect abnormal movement patterns that may indicate fatigue or early signs of injury risk.

For example, if an athlete’s knee angle frequently deviates during squats, the system can alert the coach for possible imbalance issues.
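The knee-angle check described above could be sketched like this. The expected angle, the tolerance, and the alert rate are placeholder values chosen for illustration, not clinically validated thresholds, and the function names are hypothetical.

```python
from typing import Sequence


def deviation_rate(rep_angles: Sequence[float],
                   expected: float,
                   tolerance: float) -> float:
    """Fraction of repetitions whose angle is more than `tolerance` from `expected`."""
    if not rep_angles:
        return 0.0
    deviating = sum(1 for angle in rep_angles if abs(angle - expected) > tolerance)
    return deviating / len(rep_angles)


def should_alert(rep_angles: Sequence[float],
                 expected: float = 90.0,
                 tolerance: float = 15.0,
                 max_rate: float = 0.3) -> bool:
    """Alert the coach when deviations are frequent rather than occasional."""
    return deviation_rate(rep_angles, expected, tolerance) > max_rate
```

Alerting on the rate of deviations, rather than on any single repetition, reduces false alarms caused by one noisy frame or a momentary detection error.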

4.9 Reporting and Analytics Export

At the end of each session, the system can generate an automated performance report summarizing:

- Detected actions

- Accuracy percentages

- Recommendations for improvement

Reports can be exported in PDF or CSV format for academic research, athlete profiling, or long-term documentation.
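For the CSV export, Python's standard `csv` module is sufficient. The sketch below assumes each report row is a dictionary with the three fields listed above; the column names `action`, `accuracy_percent`, and `recommendation` are illustrative rather than a fixed schema.

```python
import csv
import io
from typing import Dict, List

REPORT_FIELDS = ["action", "accuracy_percent", "recommendation"]


def report_to_csv(rows: List[Dict[str, object]]) -> str:
    """Serialize session report rows to CSV text with a header line."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=REPORT_FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = report_to_csv([
    {"action": "squat", "accuracy_percent": 87.5,
     "recommendation": "Keep your back straight."},
])
```

The returned text can be written to a file or sent as a download response by the backend; PDF output would need a separate reporting library.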

4.10 Integration with Wearable Devices (Optional)

For advanced users, the system can integrate with smartwatches or fitness bands via APIs (like Fitbit or Apple HealthKit) to collect complementary data such as heart rate or step frequency. Combining PoseNet visual data with sensor data creates a holistic picture of athlete performance.
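Once heart-rate samples have been retrieved from a wearable, they can be paired with pose data by timestamp. The sketch below deliberately avoids any vendor API and assumes the samples are already available as two parallel lists (times in seconds, sorted ascending, and beats per minute); `heart_rate_at` is a hypothetical helper.

```python
import bisect
from typing import Optional, Sequence


def heart_rate_at(sample_times: Sequence[float],
                  sample_bpm: Sequence[int],
                  t: float) -> Optional[int]:
    """Most recent heart-rate reading taken at or before time `t`, if any."""
    i = bisect.bisect_right(sample_times, t)
    return None if i == 0 else sample_bpm[i - 1]


# Example: attach a heart-rate reading to a repetition detected at t = 7 s.
times = [0.0, 5.0, 10.0]
bpm = [80, 95, 110]
rep_heart_rate = heart_rate_at(times, bpm, 7.0)
```

Wearables usually sample far less often than the camera, so carrying the latest reading forward is a simple way to align the two streams.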

Summary and Conclusion

The Sports Performance Analysis Using PoseNet project demonstrates how computer vision and AI can revolutionize the way athletes train and coaches evaluate performance. By using deep learning-based pose estimation, the system can accurately track human movement, assess posture, and provide actionable insights in real time — all without the need for expensive motion-capture hardware.

This project provides practical value not only in professional sports but also in academia, fitness centers, rehabilitation clinics, and educational institutions. It bridges the gap between technology and physical education, offering a low-cost, accessible platform for analyzing human motion.

From a research perspective, it opens opportunities for further studies in biomechanics, injury prevention, and personalized training systems. As newer pose estimation models such as MoveNet and BlazePose succeed PoseNet, this project can serve as a foundation for integrating multi-camera 3D motion analysis, real-time performance prediction, and automated feedback generation.

In conclusion, the integration of PoseNet into sports analytics highlights the potential of AI as an empowering tool in sports science education and athletic innovation. It encourages the next generation of developers, researchers, and coaches to harness the power of computer vision for precision performance improvement and smarter training methodologies.
